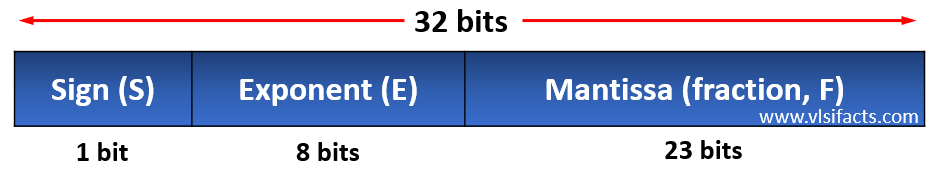

In the previous article, we got an overall idea of how floating point numbers are represented. In this article, we focus specifically on the single-precision IEEE 754 representation. The single-precision format represents a floating point number in 32 bits. The following figure shows all the parts of the single-precision representation.

As shown in the above figure, the single-precision representation has a 1-bit sign (S), an 8-bit exponent (E), and a 23-bit mantissa (F). Let’s consider one example binary number to understand each of the three parts clearly.

Example: 1100011101010

We can write the above number in normalized form, with a single 1 before the binary point, as below:

$1100011101010 = 1.100011101010 \times 2^{12}$
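The normalization step can be sketched in a few lines of Python. This is only an illustrative sketch: the helper name `normalize` is hypothetical, and it assumes the input is a positive binary integer written as a string, with no binary point.

```python
def normalize(bits: str) -> tuple:
    """Return (fraction_bits, exponent) such that the value equals
    1.fraction_bits x 2**exponent.

    Assumes `bits` is a positive binary integer without a binary point.
    """
    bits = bits.lstrip("0")           # leading zeros do not affect the value
    if not bits:
        raise ValueError("zero has no normalized form")
    exponent = len(bits) - 1          # places the binary point moves to the left
    fraction_bits = bits[1:]          # everything after the leading 1
    return fraction_bits, exponent


fraction_bits, exponent = normalize("1100011101010")
print(f"1.{fraction_bits} x 2^{exponent}")   # 1.100011101010 x 2^12
```

The number has 13 bits, so the binary point moves 12 places to the left, which gives the exponent 12.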

Sign (S): If the number is positive, then the sign bit is ‘0’; else, if the number is negative, then the sign bit is ‘1’.

In this example, the number is positive, so the sign bit is ‘0’. To avoid confusion: the given binary number is not written in any signed number format. It is written the way we write decimal numbers, with a minus sign for negative numbers and no sign for positive numbers.

Exponent (E): The exponent field is 8 bits wide. For normalized numbers, the actual exponent ranges from -126 to +127. This means that numbers of both very large and very small magnitude can be represented in the single-precision floating point format. The exponent is stored in biased form, which is obtained by adding 127 to the actual exponent. The purpose of the bias is to make the stored exponent always a positive number (1 to 254 for normalized numbers), so we do not need a separate sign bit for the exponent part.

In the above example, the biased exponent is 12 + 127 = 139. The exponent, now in binary form, is 10001011.
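As a quick check of this arithmetic, here is a minimal Python sketch. The name `biased_exponent_bits` is hypothetical, and the range check assumes the normalized exponent range of -126 to +127.

```python
BIAS = 127  # exponent bias used by the single-precision format


def biased_exponent_bits(exponent: int) -> str:
    """Return the 8-bit biased exponent field for a normalized number."""
    # Assumption: only normalized numbers are handled here.
    if not -126 <= exponent <= 127:
        raise ValueError("exponent outside the normalized range")
    return format(exponent + BIAS, "08b")   # zero-padded 8-bit binary string


print(12 + BIAS)                  # 139
print(biased_exponent_bits(12))   # 10001011
```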

Mantissa (F): The representation of the mantissa part is a little tricky. Only the part after the binary point (the fractional part) is stored as the mantissa. A normalized number always has a ‘1’ before the binary point, so this ‘1’ is not stored in the 23 bits of the mantissa. In other words, the leading ‘1’ does not consume any bit of the mantissa, but it is added back when computing the value of the number from its floating point representation.

So, in this example, the 23 bits mantissa is derived from the fractional part only, that is .100011101010.

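The complete floating point representation of the given number is as below:

| Sign (S) | Exponent (E) | Mantissa (F) |
| --- | --- | --- |
| 0 | 10001011 | 10001110101000000000000 |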

Note that we have padded 0s to the right of the mantissa to make it 23 bits. This is because a mantissa is a fractional number, and 0s added to the right of the fractional number do not change the value of the number.
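Putting the three parts together can be sketched as follows. The helper `encode_fields` is hypothetical and assumes a normalized number whose fraction fits in 23 bits, so no rounding is needed. The cross-check uses Python's standard `struct` module and the fact that binary 1100011101010 equals 6378 in decimal.

```python
import struct


def encode_fields(sign: int, exponent: int, fraction_bits: str) -> str:
    """Assemble sign, biased exponent, and padded mantissa as 'S_E_F'."""
    # Assumption: the fraction fits in 23 bits, so no rounding is required.
    if len(fraction_bits) > 23:
        raise ValueError("fraction longer than 23 bits would need rounding")
    exponent_bits = format(exponent + 127, "08b")   # add the bias of 127
    mantissa_bits = fraction_bits.ljust(23, "0")    # pad with 0s on the right
    return f"{sign}_{exponent_bits}_{mantissa_bits}"


pattern = encode_fields(0, 12, "100011101010")
print(pattern)   # 0_10001011_10001110101000000000000

# Cross-check against the bit pattern Python produces for the same value.
reference = format(int.from_bytes(struct.pack(">f", 6378.0), "big"), "032b")
assert pattern.replace("_", "") == reference
```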

Two values are handled as special cases of the floating point format: 0 and ∞ (infinity). Their representations are shown below.

| Value | Representation (S_E_F) |
| --- | --- |
| +ve 0 | 0_00000000_00000000000000000000000 |
| -ve 0 | 1_00000000_00000000000000000000000 |
| +ve ∞ | 0_11111111_00000000000000000000000 |
| -ve ∞ | 1_11111111_00000000000000000000000 |

Representation of 0 is done by keeping all exponent and mantissa bits 0. Representation of ∞ is done by keeping all exponent bits 1 and mantissa bits 0.
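These bit patterns can be inspected directly in Python. The sketch below uses the standard `struct` module to pack a value as a big-endian single-precision float; the helper name `float32_bits` is hypothetical.

```python
import struct


def float32_bits(value: float) -> str:
    """Return the single-precision bit pattern of `value` as 'S_E_F'."""
    raw = int.from_bytes(struct.pack(">f", value), "big")
    bits = format(raw, "032b")
    return f"{bits[0]}_{bits[1:9]}_{bits[9:]}"


for label, value in [("+0", 0.0), ("-0", -0.0),
                     ("+inf", float("inf")), ("-inf", float("-inf"))]:
    print(label, float32_bits(value))
```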

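Now, the question is how to determine the value of a binary number that is represented in floating-point format. We use the following formula, where S is the sign bit, E is the stored (biased) 8-bit exponent read as an unsigned integer, and F is the 23-bit mantissa read as a binary fraction.

$$\text{Binary Number} = (-1)^{S} \times (1 + F) \times 2^{E - 127}$$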
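As a rough illustration of the formula, the sketch below decodes a bit pattern written in the `S_E_F` style used in this article. The function name `decode` is hypothetical, and it handles normalized numbers only; the all-0s and all-1s exponent patterns are rejected.

```python
def decode(pattern: str) -> float:
    """Apply (-1)^S * (1 + F) * 2^(E - 127) to a 32-bit pattern."""
    bits = pattern.replace("_", "")
    if len(bits) != 32:
        raise ValueError("expected exactly 32 bits")
    s = int(bits[0])                  # sign bit
    e = int(bits[1:9], 2)             # stored (biased) exponent
    f = int(bits[9:], 2) / 2 ** 23    # mantissa bits read as a fraction
    # Assumption: special exponent patterns (all 0s, all 1s) are not handled.
    if e == 0 or e == 255:
        raise ValueError("special exponent pattern, formula does not apply")
    return (-1) ** s * (1 + f) * 2.0 ** (e - 127)


print(decode("0_10001011_10001110101000000000000"))   # 6378.0
```

The result 6378 is the decimal value of the example number 1100011101010.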

In the next article, we will work through an example of determining the value of a number from its floating point representation.